Technical SEO for Developers: a Checklist to Help You Rank Higher on Google


Published on Mar 5, 2019 | Read time: 11 min

Introduction

Many SEO strategies can be employed by anybody, but a few tactics benefit from the ability to access — and understand — a website’s code base. These are the steps a developer can take to make sure as many people discover their website as possible.

SEO or “Search Engine Optimisation” is the process of making sure a website ranks as highly as possible in the unpaid search results of Google and Bing, as well as other search engines, like China’s Baidu or Russia’s Yandex.

Each of these search engines uses its own set of algorithms to rank websites whenever somebody searches for a word or phrase. The algorithms are kept secret, partly to stop other companies mimicking the technology, but also because the search engines don’t want website owners to game the system and rank higher simply because they’ve employed a specific tactic.

In an ideal world, only the best and most relevant content would rank first for every search. However, this is no simple task, not least because it’s difficult for algorithms to understand a website’s content without a few pointers. Many of these pointers come from the content itself, but a few fall into the remit of developers: that’s where technical SEO comes in.

The Checklist

The following checklist gives you a quick view of the steps you could take to improve your site’s technical SEO. In the rest of the article, we’ll take a more detailed look at each strategy.

We’ll now look into why these steps are important for SEO and how to implement each one, starting with Google and Microsoft’s core tools for understanding and optimising your search data.

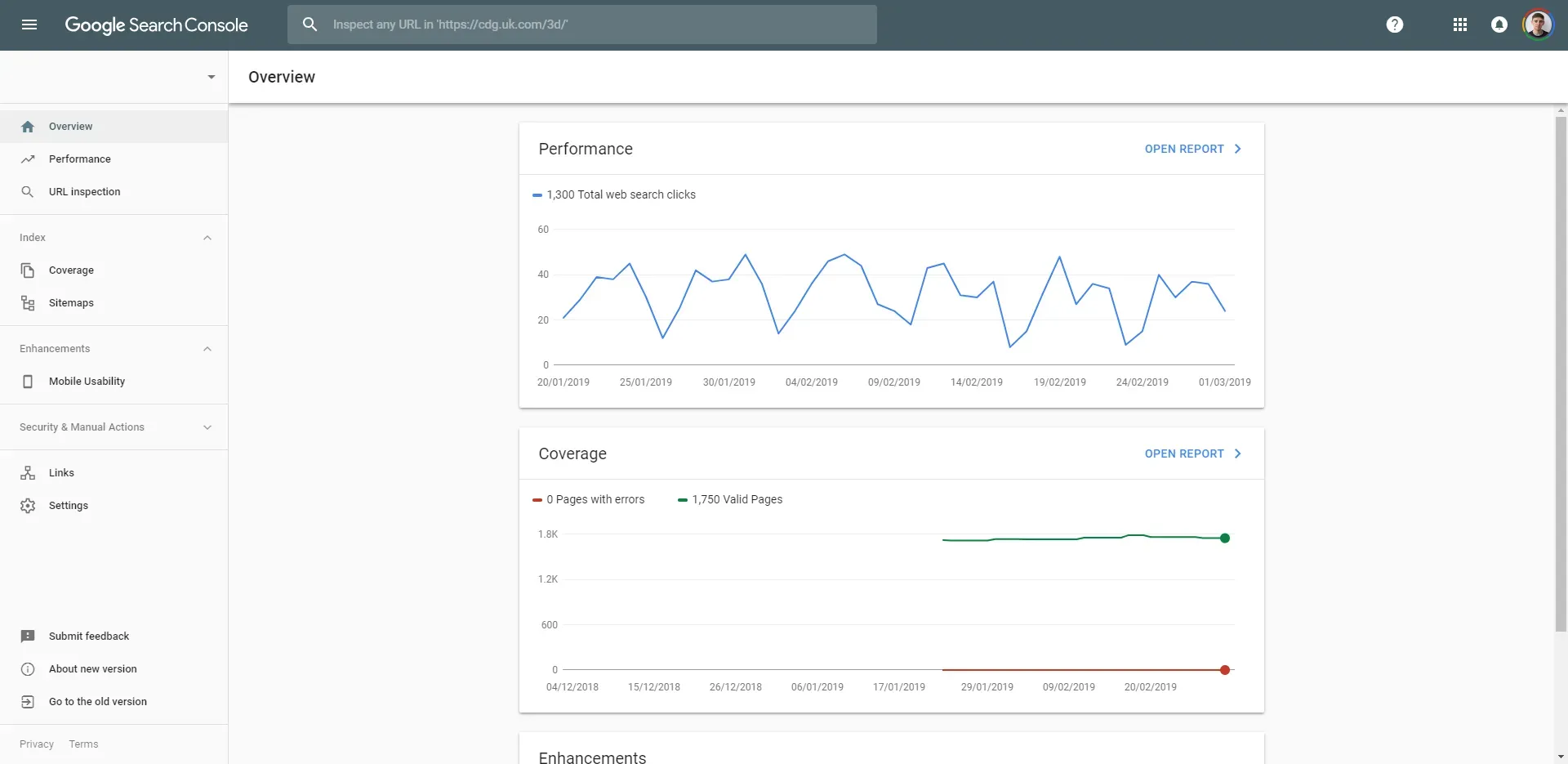

Set Up Google Search Console

Google Search Console is useful for several reasons:

- It lets you know how many unpaid clicks your website is getting.

- It draws attention to any errors in your site, which may be negatively affecting your place in search results.

- It checks that every page on your site is correctly optimised for mobile.

- And it allows you to submit XML sitemaps, which help Google understand the structure of your site and can help it display section previews (sitelinks) in search results. We’ll discuss this in greater detail below.

To set up Google Search Console, click the link here.

Once you’ve signed up, you’ll be given a unique code to place within the head tags of your site, like this:

```html
<!-- Google Search Console -->
<meta
  name="google-site-verification"
  content="+nxGUDJ4QpAZ5l9Bsjdi102tLVC21AIh5d1Nl23908vVuFHs34="
/>
```

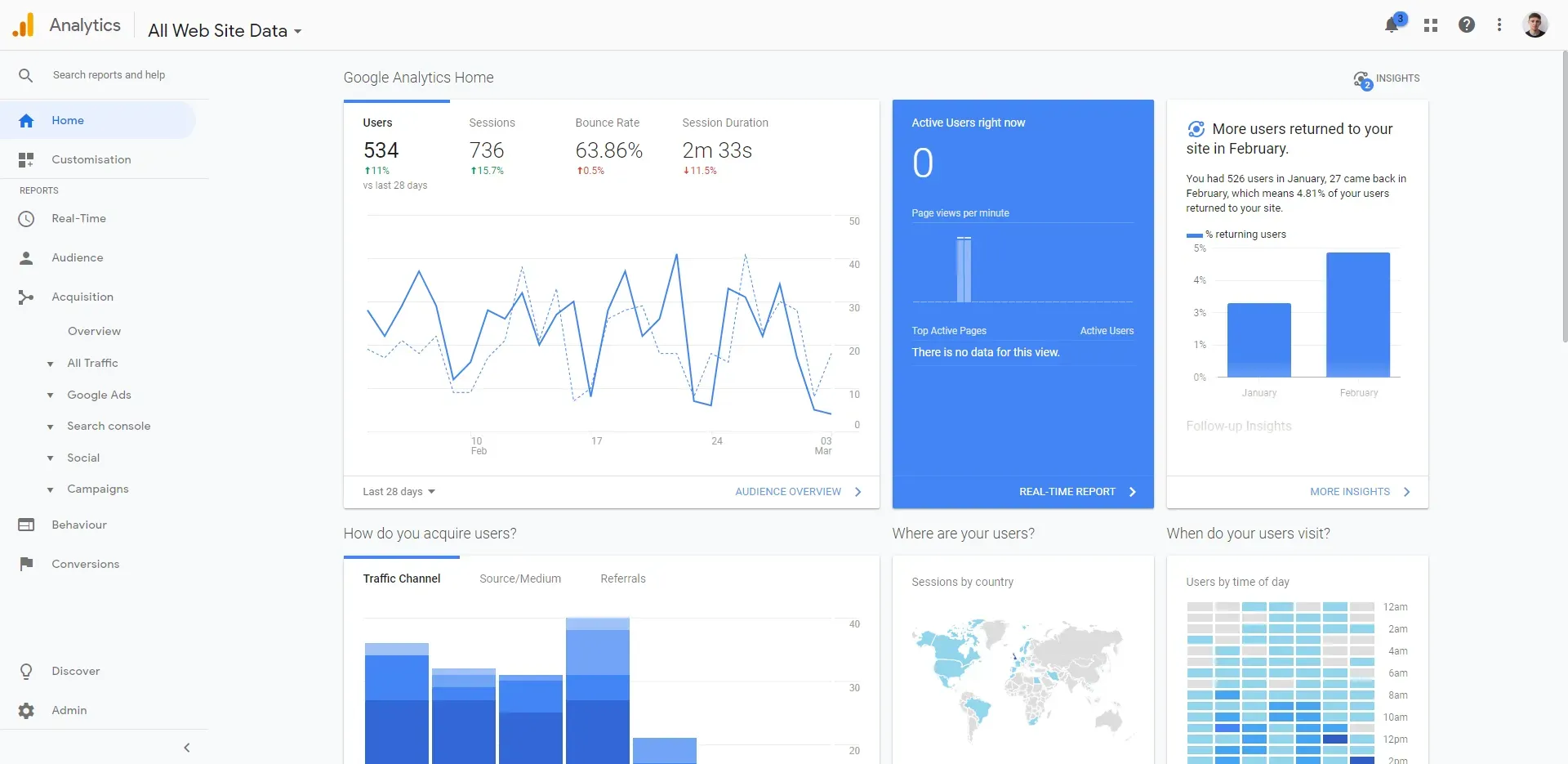

Set Up Google Analytics

Google Analytics is the de facto tool for understanding your website’s traffic. It has a huge number of useful features, which help you understand more about who your audience are, how they’re discovering your site, and what they’re doing when they’re on it.

Once you’ve set it up, anyone — developer or otherwise — can make optimisations based on the data collected by Google Analytics, not only for SEO purposes, but for broader marketing objectives like improving conversions.

To set up Google Analytics, click the link here.

If you’re not sure which option to choose, the most common is gtag.js. To set this up, insert the script tags below immediately after your site’s opening head tag. Replace GA_TRACKING_ID with your unique ID.

```html
<!-- Global Site Tag (gtag.js) - Google Analytics -->
<script
  async
  src="https://www.googletagmanager.com/gtag/js?id=GA_TRACKING_ID"
></script>
<script>
  window.dataLayer = window.dataLayer || [];
  function gtag() {
    dataLayer.push(arguments);
  }
  gtag("js", new Date());
  gtag("config", "GA_TRACKING_ID");
</script>
```
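To see why the snippet works even before gtag.js has finished downloading, here is a minimal sketch that runs in Node, assuming a plain object stands in for the browser’s window. The gtag() stub only pushes its arguments onto the dataLayer array; the async library reads that queue once it loads. The sign_up event and its method parameter are illustrative, not something the snippet above requires.

```javascript
// Stand-in for the browser's window object so the sketch runs in Node.
const window = {};

// Same stub as the snippet: every call is queued in window.dataLayer.
window.dataLayer = window.dataLayer || [];
function gtag() {
  window.dataLayer.push(arguments);
}

gtag("js", new Date());
gtag("config", "GA_TRACKING_ID");
// An illustrative custom event, queued in exactly the same way.
gtag("event", "sign_up", { method: "email" });

// Each queued entry is an arguments object; print the waiting command names.
console.log(window.dataLayer.map((args) => args[0]));
// → [ 'js', 'config', 'event' ]
```

Nothing is sent to Google in this sketch; in the browser, gtag.js processes the queued commands in order once it has loaded.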

A screenshot of the Google Analytics dashboard, showing data from another website I manage.

Optional: Connect Google Analytics and Google Search Console

By default, Google Analytics and Google Search Console are separate services, but combining them allows you to see data from Search Console inside your Analytics dashboard.

In a few steps, you can bring them together:

- Sign in to your Google Analytics account.

- On the left sidebar, click on Acquisition, then Search Console.

- Click the button called “Set up Search Console data sharing”.

- Choose your Search Console account, and click Save.

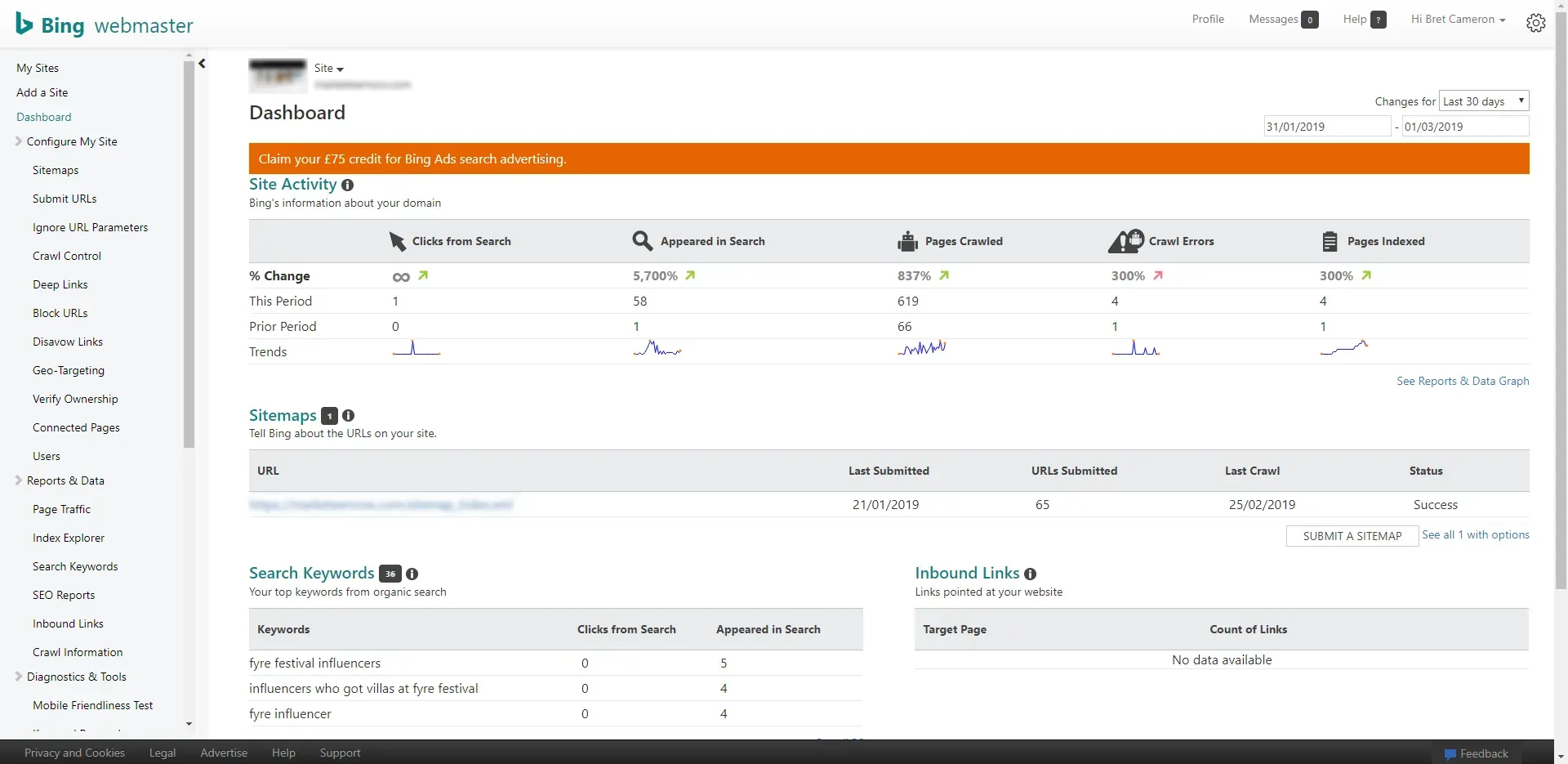

Optional: Set Up Bing Webmaster Tools

Whether or not you feel the need to add Bing Webmaster Tools to your site may depend on its size. In February 2019, Bing’s market share of search traffic was just 2.4%, compared to Google’s 92.9%.

Still, Microsoft’s Bing is the second largest search engine in the world, and it will certainly never hurt to include Bing in your suite of SEO tools. Plus, Webmaster Tools has some useful features of its own, including a place to upload XML sitemaps to Bing, a keyword research tool, and an “SEO analyzer” (currently in beta mode) which helps you comply with best practices.

To set up Webmaster Tools, click the link here.

Similar to Google Search Console, you’ll need to add some code within your website’s head tags, like this:

```html
<!-- Bing Webmaster -->
<meta name="msvalidate.01" content="FED2AB6487024C823AB7433FG2F01D63" />
```
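Both Google and Bing look for their tag inside the head of the page you ask them to verify, so it can be worth checking your rendered HTML before clicking Verify. The sketch below is a simplified, regex-based check (a real HTML parser would be more robust); metaContent and verificationStatus are hypothetical helper names, and it assumes the name attribute comes before content, as in the examples above.

```javascript
// Find the content of a named <meta> tag, looking only inside <head>.
// Simplified: assumes name="..." appears before content="...".
function metaContent(html, name) {
  const headMatch = html.match(/<head(?:\s[^>]*)?>([\s\S]*?)<\/head>/i);
  const head = headMatch ? headMatch[1] : "";
  // Escape regex metacharacters in the name (e.g. the "." in "msvalidate.01").
  const safeName = name.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
  const tag = new RegExp(
    `<meta\\s+name=["']${safeName}["']\\s+content=["']([^"']+)["']`,
    "i"
  );
  const match = head.match(tag);
  return match ? match[1] : null;
}

// Report which verification tags are present (null means missing).
function verificationStatus(html) {
  return {
    google: metaContent(html, "google-site-verification"),
    bing: metaContent(html, "msvalidate.01"),
  };
}
```

A tag that ends up in the body (for example, injected by a template in the wrong place) is reported as null, which matches how the verification would fail.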

A screenshot of Bing’s Webmaster dashboard, showing data from one of my newer websites. It looks like I have some crawl errors to fix!

Get an SSL Certificate

SSL stands for ‘Secure Sockets Layer’. It is used to establish an encrypted link between a web server and a browser, making sure that any data passed between them remains private. (Modern sites actually use SSL’s successor, TLS, but the certificates are still commonly called SSL certificates.)

You can tell if a website has an SSL certificate because the URL begins with https:// rather than http://. Google has stated that online security is one of its top priorities, and it has made HTTPS a ranking signal in its search algorithms. For that reason, it is highly recommended that you acquire an SSL certificate for your site.

HTTPS is increasingly standard on the web, and your site may already have an SSL certificate. If not, one of the best places to find out how to get one is Google’s official guide.
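Once the certificate is installed, plain-HTTP requests should be permanently redirected to HTTPS so that search engines index a single, secure version of each page. A minimal Node sketch, assuming your app (rather than a proxy or CDN) handles port 80; httpsLocation and redirectServer are hypothetical names.

```javascript
const http = require("http");

// Build the https:// URL for a request that arrived over plain HTTP.
function httpsLocation(host, path) {
  // Drop any port from the Host header (e.g. "example.com:80"); assumes a
  // hostname rather than an IPv6 literal.
  const hostname = String(host || "").split(":")[0];
  return `https://${hostname}${path}`;
}

// A plain-HTTP server whose only job is a permanent (301) redirect to HTTPS.
const redirectServer = http.createServer((req, res) => {
  res.writeHead(301, { Location: httpsLocation(req.headers.host, req.url) });
  res.end();
});

// In production you would call redirectServer.listen(80); it is not started here.
```

Using 301 rather than 302 tells crawlers the move is permanent, so the HTTPS URL is the one they should keep.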

Image Credit: Paramount Web

Create a robots.txt File

Search engines use crawlers — sometimes called “robots”, “bots” or “spiders” — to gather information about websites on the internet. Part of improving your technical SEO involves controlling what information you give to these crawlers.

Every time crawlers visit a website, they look for a file called robots.txt at the root of the domain (for example, https://www.example.com/robots.txt). This file is mainly used to tell crawlers which pages not to crawl. For example, you might not want crawlers to access content that is only available to logged-in users. To do this, write Disallow: followed by the URL path to be ignored, such as Disallow: /dashboard/.

You can also specify a user agent, allowing you to define specific behaviour for specific crawlers. So, for example, a series of rules for Google’s crawler should begin with User-agent: googlebot, while rules for Bing’s crawler should be preceded by User-agent: bingbot.

To specify rules for all user-agents except the ones you’ve named, you can use User-agent: *. A simple robots.txt file might look like this:

```text
User-agent: *
Crawl-delay: 120
Sitemap: https://www.example.com/sitemap.xml
Disallow: /dashboard/
Disallow: /static/
Disallow: /api/
Disallow: /embed/
```

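It can keep crawlers away from private pages, though it is not a security measure: robots.txt is publicly readable, and crawlers follow it voluntarily. So what has robots.txt got to do with search engine optimisation? As in the example, you can use it to point crawlers to your sitemap, which must be given as a full URL. (Note that Googlebot ignores Crawl-delay, although Bing respects it.)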
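To make the user-agent rules above concrete, here is a simplified sketch of how a crawler might decide whether it may fetch a path: it uses the group for its own user agent if one exists, and falls back to User-agent: * otherwise. It assumes plain path prefixes only; real crawlers also handle Allow lines, wildcards and longest-match precedence. parseRobots and isAllowed are hypothetical names.

```javascript
// Parse robots.txt into a Map of user agent -> list of Disallow path prefixes.
// Simplified: ignores Allow lines, wildcards (* and $) and other directives.
function parseRobots(text) {
  const groups = new Map();
  let currentAgents = [];
  let previousWasAgent = false;
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.replace(/#.*/, "").trim(); // drop comments
    const colon = line.indexOf(":");
    if (colon === -1) continue;
    const key = line.slice(0, colon).trim().toLowerCase();
    const value = line.slice(colon + 1).trim();
    if (key === "user-agent") {
      // Consecutive User-agent lines share the rules that follow them.
      if (!previousWasAgent) currentAgents = [];
      const agent = value.toLowerCase();
      currentAgents.push(agent);
      if (!groups.has(agent)) groups.set(agent, []);
      previousWasAgent = true;
    } else {
      previousWasAgent = false;
      // An empty "Disallow:" means nothing is disallowed.
      if (key === "disallow" && value !== "") {
        for (const agent of currentAgents) groups.get(agent).push(value);
      }
    }
  }
  return groups;
}

// A crawler obeys its own group if one exists, otherwise the "*" group.
function isAllowed(robotsText, userAgent, path) {
  const groups = parseRobots(robotsText);
  const rules = groups.get(userAgent.toLowerCase()) ?? groups.get("*") ?? [];
  return !rules.some((prefix) => path.startsWith(prefix));
}
```

With the example file, isAllowed(robots, "googlebot", "/dashboard/settings") is false, while a blog post path is allowed.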

Create an XML Sitemap

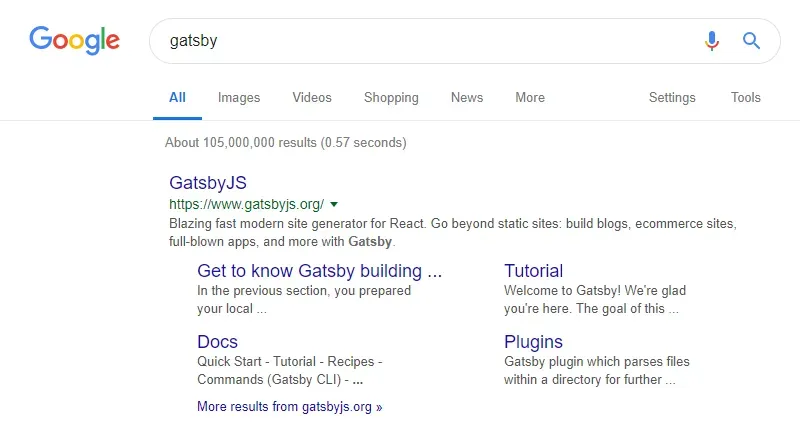

An XML sitemap allows search engines’ web crawlers to understand the layout of your website and navigate it more easily. A sitemap can also help if you want Google to show sitelinks for your website: the sub-headings which point users to specific pages.

This screenshot shows four sitelinks in the search result for GatsbyJS

XML is simply a mark-up language often used to represent data structures. Unlike robots.txt, whose name is fixed, you can name your sitemap whatever you like, though sitemap.xml is most common.

There are many ways to create XML sitemaps automatically. Many content management systems have plugins to do the job (like Yoast for WordPress), and there’s an XML Sitemap Module for React. Google has some useful advice about building a sitemap, including in some less common formats.

If you’d like to create your sitemap by hand, or you just want to understand what’s going on under the hood, here’s what your XML code might look like for a site with just one URL:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://www.bretcameron.com</loc>
    <lastmod>2024-08-02T21:15:56.626Z</lastmod>
    <changefreq>weekly</changefreq>
    <priority>1</priority>
  </url>
</urlset>
```

For an ordinary sitemap, the root element is urlset. A sitemapindex root is only needed when you split a large site across several sitemap files (each file may list at most 50,000 URLs); each of its sitemap entries then gives the location of one of those files.
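If you’d rather generate the file yourself than rely on a plugin, a sitemap is simple enough to build from a list of URLs. This is a minimal sketch, assuming your pages are available as { loc, lastmod } objects; buildSitemap and escapeXml are hypothetical names. Note that characters such as & must be escaped inside loc.

```javascript
// Escape the characters that are special in XML (e.g. "&" in query strings).
const XML_ESCAPES = { "&": "&amp;", "<": "&lt;", ">": "&gt;", '"': "&quot;", "'": "&apos;" };
function escapeXml(text) {
  return text.replace(/[&<>"']/g, (ch) => XML_ESCAPES[ch]);
}

// Build a single sitemap (<urlset>) from entries like { loc, lastmod }.
function buildSitemap(entries) {
  const urls = entries.map(({ loc, lastmod }) => {
    const lines = ["  <url>", `    <loc>${escapeXml(loc)}</loc>`];
    if (lastmod) lines.push(`    <lastmod>${escapeXml(lastmod)}</lastmod>`);
    lines.push("  </url>");
    return lines.join("\n");
  });
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...urls,
    "</urlset>",
  ].join("\n");
}
```

In a build script you might then write the result to your public folder, for example with fs.writeFileSync("sitemap.xml", buildSitemap(pages)), where pages is your own list of URLs.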

To make sure your sitemap gets crawled, upload it to your site and reference it in your robots.txt file, as described above. You can also submit your sitemap directly to Google and Bing using Search Console and Webmaster Tools, respectively; this is a useful way to check that your sitemap is working, as both services will point out any errors.

Add Structured Data for Rich Results

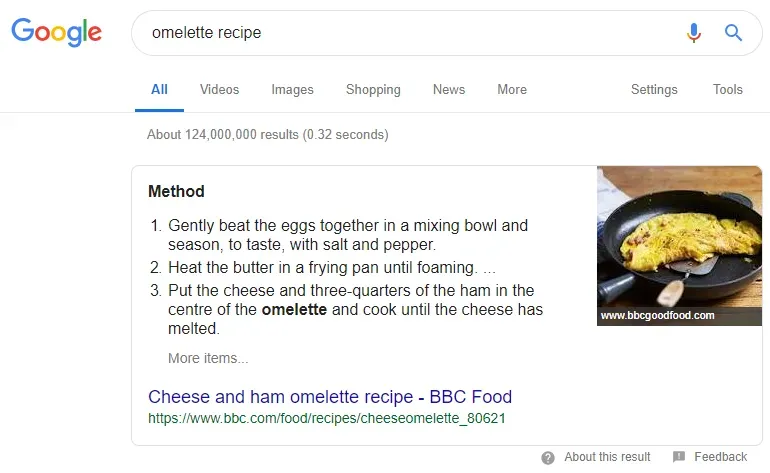

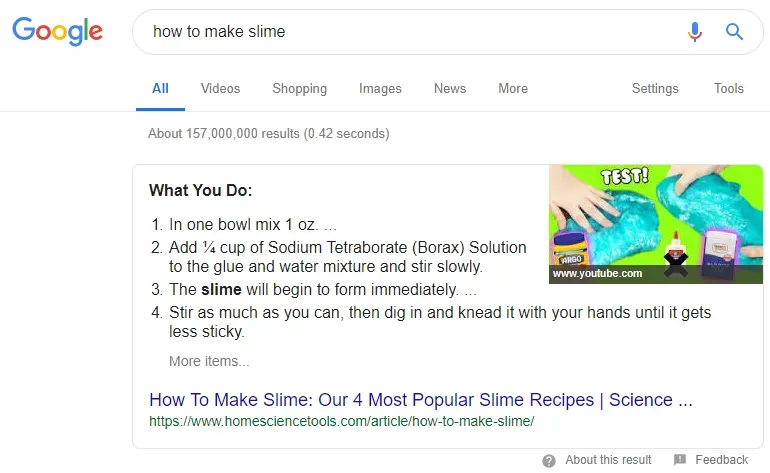

The images below are examples of “rich results” or “rich snippets”:

In many respects, rich results are the best outcome you could hope for from SEO. They are much more eye-catching than the rest of the results on the page, and featured snippets (single snippets given priority at the top of the page) have been reported to increase page views by over 500%.

But getting your site into rich results is difficult. You need to be in the running for the top few results for a given search term and, to be eligible for rich results at all, you need to mark up certain elements on your page using Structured Data.

Structured Data comes in several different formats, and it allows Google and other search engines to better understand the content of your page. Whatever the format, the vocabulary (the types and properties you can describe) is the same, and its definitions can be found on schema.org.

JSON-LD

The recommended method for adding structured data to your website is using JSON-LD, because it is the easiest to implement and maintain.

You simply insert it as a script on each of your HTML pages, using <script type="application/ld+json">.

Here’s what JSON-LD looks like in practice. I’ve taken a small section from the JSON-LD used on the official website of the Oscars:

```html
<script type="application/ld+json">
  {
    "@context": "http://schema.org/",
    "@type": "Organization",
    "name": "Oscars",
    "url": "https://oscar.go.com",
    "logo": {
      "@type": "ImageObject",
      "url": "https://oscar.go.com/images/91Oscars-Logo.png",
      "width": 166,
      "height": 48
    },
    "sameAs": [
      "//www.facebook.com/TheAcademy",
      "//instagram.com/theacademy/",
      "//twitter.com/theacademy",
      "//www.youtube.com/user/Oscars",
      "//plus.google.com/+Oscars/posts"
    ]
  }
</script>
```

The keys above that start with @, such as "@context" and "@type", are JSON-LD keywords; the types and properties, such as "Organization" and "name", are defined on the schema.org website.

There are pre-defined categories for lots of different types of content, each with their own standard set of properties. See, for example, Articles, Movies and Photographs. Another useful site, https://jsonld.com/, contains lots of examples of JSON-LD mark-up. And often, if you check a popular website in your site’s niche, you’ll be able to see the mark-up they’re using in their source code, and use it as inspiration for your own site.

Adding Dynamic Values to JSON-LD

Often, you’ll want to add dynamic data to your JSON-LD. How exactly you do this will depend on the language(s) you are using on your site, and how they prefer to create JSON objects.

In most languages, the usual method involves building an object (or dictionary) containing your dynamic values, and then converting it to JSON using a built-in function, such as json_encode() in PHP, json.dumps() in Python, or JSON.stringify() in JavaScript.
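As a sketch of the JavaScript route, assuming the page data comes from something like a CMS record (the post object, articleJsonLd and jsonLdScriptTag are hypothetical), the dynamic values go into an ordinary object, which is converted with JSON.stringify(). Any < is then escaped so that a value containing </script> can’t close the tag early.

```javascript
// Hypothetical page data, e.g. loaded from a CMS or database.
const post = {
  title: "Technical SEO for Developers",
  author: "Jane Doe",
  published: new Date("2019-03-05T00:00:00Z"),
  url: "https://www.example.com/technical-seo",
};

// Map the dynamic values onto a schema.org Article.
function articleJsonLd(p) {
  return {
    "@context": "https://schema.org",
    "@type": "Article",
    headline: p.title,
    author: { "@type": "Person", name: p.author },
    datePublished: p.published.toISOString(),
    mainEntityOfPage: p.url,
  };
}

// Serialise to a script tag. "\u003c" is still "<" to a JSON parser, but it
// stops a value containing "</script>" from ending the HTML element early.
function jsonLdScriptTag(data) {
  const json = JSON.stringify(data).replace(/</g, "\\u003c");
  return `<script type="application/ld+json">${json}</script>`;
}
```

The resulting string can be inserted into the head of each page by whatever templating your site already uses.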

Microdata and RDFa

Microdata and RDFa are alternatives to JSON-LD. They take a bit more time to implement, and although microdata used to be Google’s preferred method, Google now recommends JSON-LD.

For that reason, I won’t discuss these alternatives in any detail. If you’d like to find out more about them, click here to learn about microdata and click here to learn about RDFa.

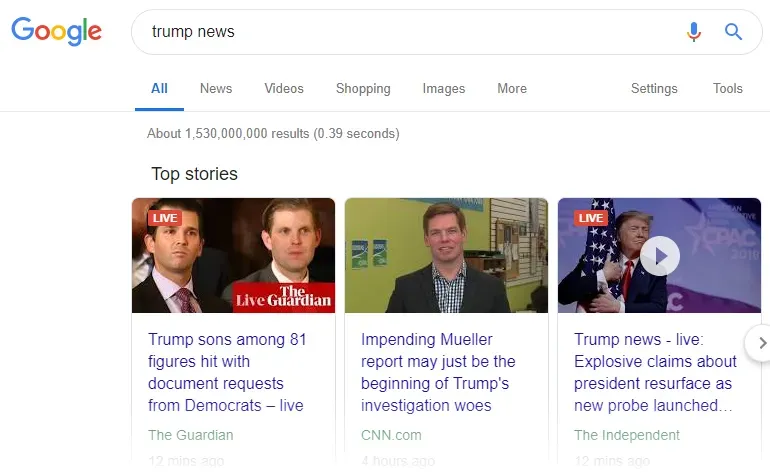

Check Your Structured Data Is Working

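To ensure your structured data is getting picked up by Google, you can use their Structured Data Testing Tool. Simply plug in your URL or paste in your code, and the testing tool will tell you what it can read, and any errors or warnings you ought to fix. (Google has since retired this tool: its Rich Results Test now covers Google-specific features, and the general-purpose checker lives on as the Schema Markup Validator on schema.org.)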

The homepage of The New York Times, analysed using Google’s Structured Data Testing Tool.
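Alongside Google’s tools, a quick local check can catch JSON-LD that won’t even parse, which is easy to break when values are added dynamically. This sketch (checkJsonLd is a hypothetical name) uses a regex rather than a real HTML parser, and only checks that each block is valid JSON with @context and @type; it does not validate against schema.org.

```javascript
// Find every JSON-LD block in an HTML string and report basic problems.
// Regex-based: fine for a quick check, but not a full HTML parser.
function checkJsonLd(html) {
  const blockPattern =
    /<script[^>]*type=["']application\/ld\+json["'][^>]*>([\s\S]*?)<\/script>/gi;
  const results = [];
  for (const match of html.matchAll(blockPattern)) {
    try {
      const data = JSON.parse(match[1]);
      // Each top-level object should state its vocabulary and its type.
      const missing = ["@context", "@type"].filter(
        (key) => data === null || typeof data !== "object" || !(key in data)
      );
      results.push({ ok: missing.length === 0, type: data && data["@type"], missing });
    } catch (err) {
      // JSON.parse throws on syntax errors such as trailing commas.
      results.push({ ok: false, error: err.message });
    }
  }
  return results;
}
```

Running it over your built pages (for example in a test suite) flags broken blocks before Google’s crawler ever sees them.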

Make Your Site as Fast as Possible

Google has been using speed as a search signal since 2010, and a faster site brings related SEO benefits: the quicker your pages load, the longer users tend to stay, and the more likely they are to click through to other pages.

These metrics (the length of time a user spends on your site, and CTR, or Click-Through Rate) are widely thought to influence rankings, although Google has not confirmed that it uses them as direct ranking signals.

This topic could be an article, or even an entire book of its own, but here are some speed-enhancements to get you started:

- Optimise image sizes, and use lazy loading.

- Upgrade to a faster DNS provider and a faster hosting provider.

- Compress the files you serve, for example with gzip.

- Minify your files for the build version of your site.

- Reduce the number of HTTP requests to your server, by only using scripts and plugins where necessary.
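Compression is usually switched on in your web server or CDN, but the idea is easy to see in Node: compress the response only when the browser’s Accept-Encoding header says it can handle gzip. A minimal sketch using the built-in zlib module; maybeGzip is a hypothetical name, and it ignores q-values such as gzip;q=0.

```javascript
const zlib = require("zlib");

// Gzip an HTML response body only if the client advertises gzip support.
// Simplified: it ignores q-values, so "gzip;q=0" would still count as support.
function maybeGzip(body, acceptEncoding = "") {
  // Vary tells caches that the response depends on Accept-Encoding.
  const headers = { "Content-Type": "text/html; charset=utf-8", Vary: "Accept-Encoding" };
  if (!/\bgzip\b/i.test(acceptEncoding)) {
    return { headers, body: Buffer.from(body) };
  }
  return {
    headers: { ...headers, "Content-Encoding": "gzip" },
    body: zlib.gzipSync(body),
  };
}
```

In a request handler you would pass req.headers["accept-encoding"], then send the returned headers and body.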

Ensure Your Site is Mobile-Friendly

In 2018, mobile phones accounted for roughly 50% of all web traffic. In March 2018, Google announced that websites which follow its best practices for mobile would be migrated to mobile-first indexing, meaning Google uses the mobile version of a page for indexing and ranking. Without optimising your site for mobile, you risk being far less visible to as much as half the traffic on the internet.

To make your site mobile-friendly, make sure that your layout is easy to read on a small screen, with no elements blocking others or running off the screen. Google is also adamant that your mobile site should contain the same content as your desktop site, and that includes structured data and metadata as well as the visible content on your site.

To find out more, and to see a full list of Google’s recommendations, click this link.
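Because Google indexes the mobile version of your pages, a quick way to catch gaps is to compare the SEO-relevant parts of your desktop and mobile HTML. This rough, regex-based sketch (seoSignals and parityProblems are hypothetical names) compares the title, meta description and number of JSON-LD blocks, assuming the description tag lists name before content.

```javascript
// Pull a few SEO-relevant signals out of an HTML string. Regex-based and
// simplified: assumes the description meta tag lists name before content.
function seoSignals(html) {
  const title = html.match(/<title[^>]*>([\s\S]*?)<\/title>/i);
  const description = html.match(
    /<meta\s+name=["']description["']\s+content=["']([^"']*)["']/i
  );
  const jsonLd = html.match(/<script[^>]*application\/ld\+json/gi) || [];
  return {
    title: title ? title[1].trim() : null,
    description: description ? description[1] : null,
    jsonLdBlocks: jsonLd.length,
  };
}

// List the signals that differ between the desktop and mobile versions of a page.
function parityProblems(desktopHtml, mobileHtml) {
  const desktop = seoSignals(desktopHtml);
  const mobile = seoSignals(mobileHtml);
  return Object.keys(desktop).filter((key) => desktop[key] !== mobile[key]);
}
```

An empty array means these particular signals match; it says nothing about layout or visible content, which still need checking by eye or with Google’s tools.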

Optional: Enable AMP

The AMP (“Accelerated Mobile Pages”) Project has received backing from Google, and it was founded in an effort to create web pages that load as quickly as possible on mobile, as well as to provide an all-round smoother mobile experience.

The best way to learn about AMP is using their official website.

In brief, AMP makes use of 3 core components. AMP pages can be served exclusively to mobile users, or AMP can underlie your whole site if it makes sense for the desktop version too; this is called “canonical AMP”.

The 3 core components are:

- AMP HTML: a restricted form of HTML, whose restrictions make sure it works as well as possible with the other tools.

- AMP JS: a JavaScript library that implements AMP’s best practices for performance, and which manages resource loading to ensure the page renders as quickly as possible.

- The Google AMP Cache: this fetches AMP pages and caches them. It also ensures that only valid AMP pages are distributed to users.

Using AMP signals to Google that your website is built with mobile in mind, and the related speed benefits help too, as speed is a ranking signal for SEO.

The reason AMP is optional is that the tool itself isn’t integral to successful SEO. However, there are few other ways to make your mobile site as fast and responsive, so if you’re serious about mobile SEO, it’s something you may want to consider.

Attend to Smaller Fixes

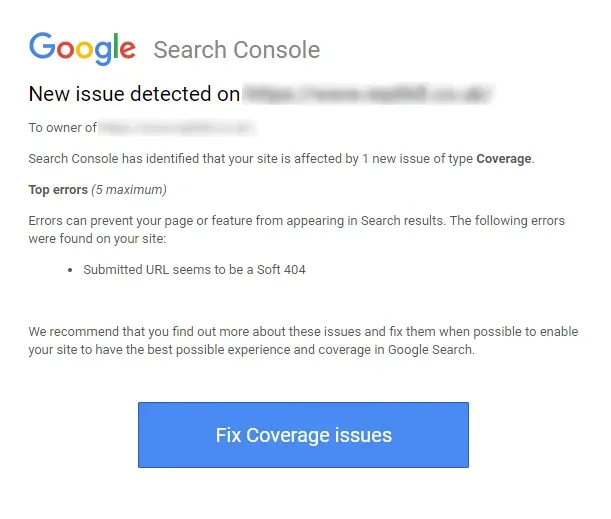

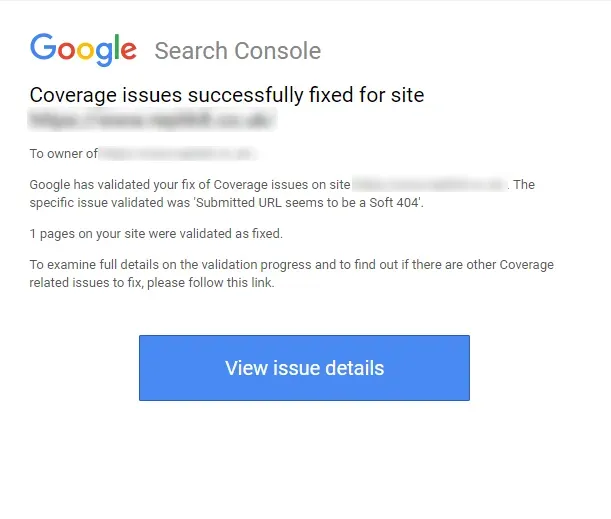

Finally, there is a whole host of smaller fixes that you can make to ensure your technical SEO is as good as possible. If you’ve set up Google Search Console and Bing Webmaster Tools correctly, they will tell you when they identify an error while crawling your site, and suggest how to fix it.

Search Console recently drew attention to a Soft 404 on one of my sites

A soft 404, for example, is a URL that shows users a page saying the content does not exist, but returns a success code (200 ‘OK’) instead of an appropriate error code (such as 404 ‘Not Found’ or 410 ‘Gone’).
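The fix for a soft 404 is to send the real status code along with the friendly error page. A minimal Node sketch, assuming a hypothetical in-memory pages map stands in for your content: route() picks the status and body, and the server sends them.

```javascript
const http = require("http");

// Hypothetical content store standing in for a CMS or database lookup.
const pages = new Map([
  ["/", "<h1>Home</h1>"],
  ["/about", "<h1>About</h1>"],
]);

// Choose the status and body for a path. Missing pages get a genuine 404
// status; returning 200 with a "not found" message is what makes a soft 404.
function route(pathname) {
  if (pages.has(pathname)) {
    return { status: 200, body: pages.get(pathname) };
  }
  return { status: 404, body: "<h1>Page not found</h1>" };
}

const server = http.createServer((req, res) => {
  // Parse the path, ignoring any query string.
  const { pathname } = new URL(req.url, "http://localhost");
  const { status, body } = route(pathname);
  res.writeHead(status, { "Content-Type": "text/html; charset=utf-8" });
  res.end(body);
});

// server.listen(8080) to try it locally; it is not started here.
```

Users still see a helpful page, but crawlers now get an unambiguous signal that the URL has no content.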

Duplicate Content

One of the more serious “smaller fixes” is to remove any duplicate content. In the early days of search engines, some black hat SEO marketers used duplicate content to trick search engines into thinking their website was more relevant. As a result, there are cases in which Google and Bing penalise sites for having suspicious-looking duplicate content.

Where there are multiple copies of a single page for legitimate reasons (for example, mobile or printer-friendly versions of a page), you have a couple of options to make sure they are not incorrectly identified as duplicate content:

- Canonical Links: You can use a canonical link element in the head of each page to tell search engines which URL is preferred, such as:

```html
<link rel="canonical" href="https://example.com/page.php" />
```

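- 301 Redirects: At least one Google engineer, Matt Cutts, has suggested that search engines prefer 301 redirects to canonical links. Although it’s rare, crawlers can choose to ignore canonical links, so a 301 redirect is more authoritative. The trade-off is that visitors can no longer reach the redirected URL, so this suits cases where you don’t need both versions to stay available. On an Apache server, you can implement 301 redirects in an .htaccess file, like so:

```apache
Redirect 301 /old_file.html /new_file.html
```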
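Whichever option you choose, it helps to reduce every variant of a URL to one preferred form in code, so the canonical link (or redirect target) is generated consistently. This sketch assumes a hypothetical list of query parameters that never change page content (tracking tags and a print flag); canonicalUrl and canonicalLinkTag are hypothetical names.

```javascript
// Hypothetical list of query parameters that never change the page's content.
const IGNORED_PARAMS = ["utm_source", "utm_medium", "utm_campaign", "print"];

// Reduce any variant of a URL to one preferred form.
function canonicalUrl(rawUrl) {
  const url = new URL(rawUrl); // the URL parser also lower-cases the hostname
  url.protocol = "https:";
  url.hash = "";
  for (const param of IGNORED_PARAMS) url.searchParams.delete(param);
  return url.toString();
}

// Markup for the head of every variant of the page.
function canonicalLinkTag(rawUrl) {
  return `<link rel="canonical" href="${canonicalUrl(rawUrl)}" />`;
}
```

Because every variant passes through the same function, a tracking link, a printer-friendly flag and an http:// address all point search engines at the same canonical URL.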

Conclusion

Ultimately, most of a search engine’s decisions about how to rank search results come down to the content on your site and how relevant it is to the search term in question.

However, technical SEO is a very important part of the overall SEO puzzle, and one which non-developers might struggle to carry out. Not only does technical SEO offer the chance of sitelinks, rich results and featured snippets, but several technical factors, including page speed and mobile-friendliness, are ranking signals in their own right.
